This isn’t racism coming back to bite you in the ass. This is just plain old fascism, which does not require a racism foothold to get started.

Fascism can generate its own racism foothold through its own means.

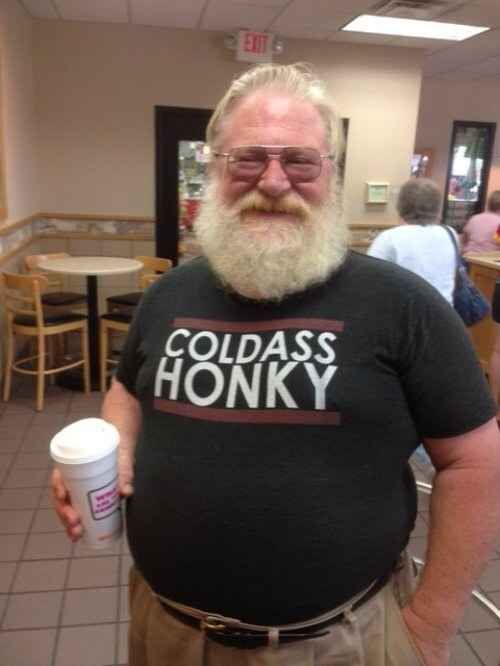

Cosplaying

You can’t fix stupid, it will only drag you down with it.

What’s the alternative? More Republicans? We live in a shitty two-party system.

Ah yes the: “Accountability is bad because then those held accountable will try and hide their actions” argument.

It’s not an ideal position to hold, as it’s one that’s subservient to abuse and malicious intent.

It is, just not for the pleb class.

Yeah, but as of late, when has something being illegal in the US actually mattered to those in positions of power?

The United States can’t manufacture shit so yeah, Canada should step up and prepare.

Likely just a tale.

Baking soda is basic, which would imply that the chemical being used is acidic, so that it’s neutralized by the baking soda. This also assumes that they mix in such a way that the baking soda actually makes contact with enough of that chemical to have any effect.

All of these are pretty big assumptions that are very easily wrong for any number of devices and chemicals.

I assume that the gitea instance itself was being hit directly, which would make sense. It has a whole rendering stack that has to reach out to a database, get data, render the actual webpage through a template…etc

It’s a massive amount of work compared to serving up static files from say Nginx or Caddy. You can stick one of these in front of your servers, and cache http responses (to some degree anyways, that depends on gitea)

Benchmarks like this show what kind of throughput you can expect on say a 4 core VM just serving up cached files: https://blog.tjll.net/reverse-proxy-hot-dog-eating-contest-caddy-vs-nginx/#10-000-clients

90-400MB/s derived from the stats there on 4 cores. The upper end of that is enough to saturate a 3Gb/s connection. And caching intentionally polluted sites is crazy easy since you don’t care if it’s stale or not. Put a Cloudflare cache in front of it and it’s even easier.
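For anyone who wants to check the units, here is a rough sketch of the conversion, assuming decimal units (1 MB = 1,000,000 bytes, 1 Gb = 1,000,000,000 bits). The function name is just made up for illustration.

```python
def mbytes_to_gbits(mb_per_s: float) -> float:
    """Convert megabytes per second to gigabits per second (decimal units)."""
    return mb_per_s * 8 / 1000


# Throughput range quoted above for a 4 core box serving cached files.
low_gbps = mbytes_to_gbits(90)
high_gbps = mbytes_to_gbits(400)

print(f"90 MB/s  is about {low_gbps:.2f} Gb/s")
print(f"400 MB/s is about {high_gbps:.2f} Gb/s")
```

So only the top of that range gets past 3Gb/s; the low end is under 1Gb/s.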

You could dedicate an old Ryzen CPU (Say a 2700x) box to a proxy, and another RAM heavy device for the servers, and saturate 6Gb/s with thousands and thousands of various software instances that feed polluted data.

Hell, if someone made it a deployable utility… Oof just have self hosters dedicate a VM to shitting on LLM crawlers, make it a party.

Even more reason why it should be released as a dump, and not walled in behind a website.

This is assuming aggressively cached, yes.

Also “Just text files” is what every website is sans media. And you can still, EASILY get 10+ MB pages this way between HTML, CSS, JS, and JSON. Which are all text files.

A gitea repo page for example is 400-500KB transferred (1.5-2.5MB decompressed) of almost all text.

A file page is heavier, coming in around 800-1000KB (additional JS and CSS).

If you have a repo with 150 files, and the scraper isn’t caching assets (many don’t), then you just served up roughly 135MB of HTML/CSS/JS alongside the actual repository assets.
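The 135MB figure is just the midpoint of the file page range times the file count. A quick sketch of that arithmetic, assuming one page fetch per file, no asset caching on the scraper side, and decimal units (1 MB = 1000 KB); the function name is hypothetical:

```python
def scrape_cost_mb(file_count: int, kb_per_page: float) -> float:
    """Total transfer in MB if every file page is fetched once with no asset caching."""
    return file_count * kb_per_page / 1000


FILES = 150  # repo size used in the example above

low_mb = scrape_cost_mb(FILES, 800)    # light end of the file page range
mid_mb = scrape_cost_mb(FILES, 900)    # midpoint of 800-1000KB
high_mb = scrape_cost_mb(FILES, 1000)  # heavy end of the range

print(f"{low_mb:.0f}-{high_mb:.0f} MB, midpoint {mid_mb:.0f} MB")
```

That is before counting the repo page itself or any raw file downloads.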

Fair fair. I missed that

They only have immunity because the laws aren’t enforced.

They don’t actually have immunity under the laws today. ICE are not law enforcement; they do not have the ability or jurisdiction to be law enforcement. Most of their actions are illegal under state and federal laws. However, actual law enforcement refuses to enforce the laws in regards to ICE.

I can get a 50Gb/s residential link where I am, and have a whole rack of servers.

Sounds like a good opportunity to crowd fund thousands and thousands of common scrapeable instances that have random poisoning.

Low key win for kink communities.

Yeah but that was before you had billionaires of this size able to manipulate entire markets in this capacity.

Probably GitHub Copilot.

The rest just sucks.